In this article, the author covers Knative Serving, which is responsible for deploying and running containers as well as for networking and auto-scaling. Auto-scaling allows scaling to zero, which is probably the main reason Knative is referred to as a serverless platform. By haralduebele.
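To make "scale to zero" concrete, here is a simplified model of concurrency-based auto-scaling in Python. It is a sketch, not Knative's autoscaler code. It assumes the commonly documented defaults of a target of about 100 concurrent requests per pod at 70% utilization (check the `config-autoscaler` ConfigMap in your cluster). The function `desired_pods` and its parameters are hypothetical. The real autoscaler also averages metrics over a stable window and waits for a grace period before removing the last pod.

```python
import math


def desired_pods(observed_concurrency: float,
                 target_per_pod: int = 100,
                 utilization_pct: int = 70,
                 max_scale: int = 0) -> int:
    """Hypothetical, simplified concurrency-based scaling rule (illustration only)."""
    if observed_concurrency <= 0:
        # No in-flight requests: the workload can be scaled to zero.
        # (Knative itself waits for a stable window and grace period first.)
        return 0
    # Each pod is only expected to run at a fraction of its concurrency target.
    effective_target = target_per_pod * utilization_pct / 100
    pods = math.ceil(observed_concurrency / effective_target)
    if max_scale > 0:
        # Optional upper bound on the number of pods.
        pods = min(pods, max_scale)
    return pods


if __name__ == "__main__":
    for load in (0, 35, 150):
        print(load, "concurrent requests ->", desired_pods(load), "pod(s)")
```

The point of the sketch is that the pod count follows request concurrency rather than CPU usage, so an idle service naturally ends up at zero pods.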

Knative introduces its own terminology for its resources:

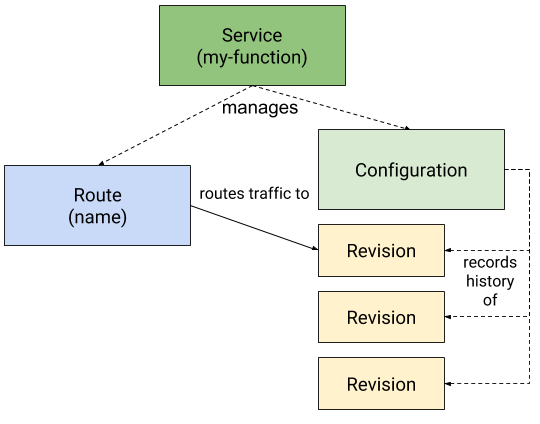

- Service: Responsible for managing the life cycle of an application/workload. Creates and owns the other Knative objects, Route and Configuration.

- Route: Maps a network endpoint to one or multiple Revisions. Allows traffic management.

- Configuration: Desired state of the workload. Creates and maintains Revisions.

- Revision: Specific version of a code deployment. Revisions are immutable. Revisions can be scaled up and down. Rules can be applied to the Route to direct traffic to specific Revisions.

Source: https://knative.dev/
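The following Python sketch builds a Knative Service manifest (`serving.knative.dev/v1`) as a plain dictionary to show how the resources above map onto one object: the `template` section acts as the Configuration (each change produces a new, immutable Revision), and the `traffic` section acts as the Route that splits requests between Revisions. The service name `hello`, the image reference, and the revision names are hypothetical placeholders, and the helper `knative_service` is illustrative rather than part of any Knative tooling.

```python
import json


def knative_service(name: str, image: str, revision_suffix: str,
                    previous_revision: str, previous_percent: int) -> dict:
    """Hypothetical helper: build a Knative Service manifest with a traffic split."""
    if not 0 <= previous_percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return {
        "apiVersion": "serving.knative.dev/v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # Configuration part: every change to the template creates a new Revision.
            "template": {
                "metadata": {"name": f"{name}-{revision_suffix}"},
                "spec": {"containers": [{"image": image}]},
            },
            # Route part: traffic percentages across Revisions must add up to 100.
            "traffic": [
                {"revisionName": previous_revision, "percent": previous_percent},
                {"latestRevision": True, "percent": 100 - previous_percent},
            ],
        },
    }


if __name__ == "__main__":
    # Keep 80% of traffic on the old Revision and send 20% to the new one.
    manifest = knative_service("hello", "ghcr.io/example/hello:v2", "v2", "hello-v1", 80)
    print(json.dumps(manifest, indent=2))
```

Because `kubectl` accepts JSON as well as YAML, the printed output could be piped to `kubectl apply -f -` on a cluster with Knative Serving installed.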

Knative workloads: in contrast to general-purpose containers (as run on plain Kubernetes), stateless, request-triggered (i.e. on-demand), auto-scaled containers have the following properties:

- Little or no long-term runtime state (especially in cases where code might be scaled to zero in the absence of request traffic)

- Logging and monitoring aggregation (telemetry) is important for understanding and debugging the system, as containers might be created or deleted at any time in response to autoscaling

- Multitenancy is highly desirable to allow cost sharing for bursty applications on relatively stable underlying hardware resources

To learn more, read the full article. It also gives you access to a code repository with example code, which makes it an excellent resource for anybody migrating from Kubernetes to Knative.
